Can AI replace Alameda Post staff?

There have been lots of reports lately about new Artificial Intelligence (AI) tools that are now available for everyone to use. Maybe you have tried making your social media profile photo more attractive with Lensa [1] or creating an impossible image using MidJourney [2] or Stable Diffusion [3]. Microsoft recently announced that it is investing $10 billion [4] more in the company that created the ChatGPT [5] AI chatbot to incorporate the technology into its search engine, Bing, and its word processor, Word. This kind of investment means these tools will soon be widely available to the public, beyond the beta versions now available for tinkerers and geeks like me.

These Artificial Intelligence tools work by processing huge datasets and classifying the information, styles, and construction of each written or visual work. All this information is aggregated and stored and used as references for prompts made by users to generate a new product. These products are not really “new,” however, as the AI is only capable of synthesizing information contained in its databases—not creating something truly from scratch.

The appeal of many of these tools for business, beyond the wow-factor, is cost-cutting. Having computers do work saves time and money in myriad ways, and is attractive not only to international conglomerates, but to small nonprofit organizations like ours. The choice is prioritizing which is more valuable: the human touch or saving money. No computer algorithm knows Alameda like those who live and work and play here—not yet anyway.

Artificial image generators

I have been playing with some of these tools, trying them for some needs we have at the Post. These artificial intelligence tools are surprisingly capable. With a few short words of direction, I can commission a custom illustration or generate a block of text. This can save hours, if not days, of work—and the results are often quite useful. We have started generating art for our weekly podcast [6] using AI tools, and you can see some of the results below, and in this week’s episode.

[7]

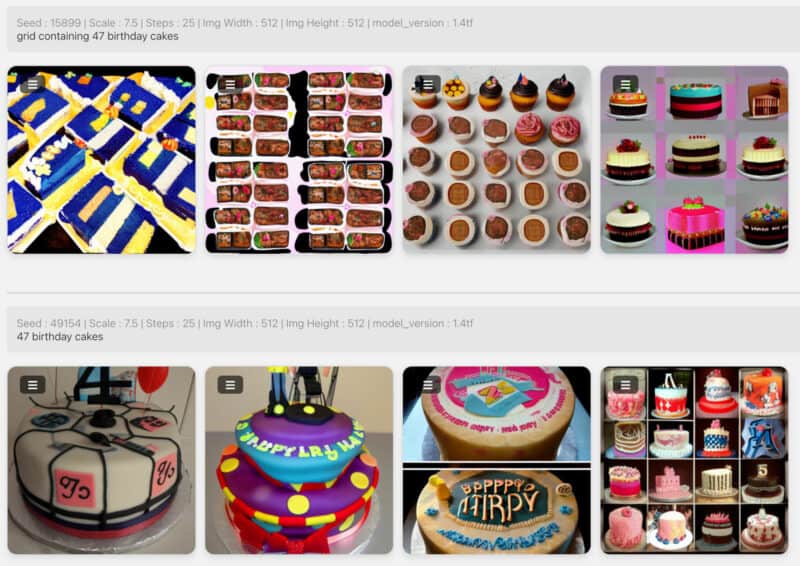

Getting a truly interesting and useful image from one of these tools takes persistence, practice, and a carefully refined vocabulary. Often the results are dreamlike, with indecipherable text and glitches that make computer-generated artwork easy to spot. Other times, the results simply miss the mark. For our story about donations to the Food Bank that were equal to 47 birthday cakes [8], I couldn’t get closer than this to depicting all 47 birthday cakes.

[9]

Many arts communities reject these computer-generated artworks [10], deriding them as derivative of other artists’ works without attribution, as well as sloppy and unartistic. But other organizations have embraced the images and use them regularly, as BoingBoing [11] does to illustrate many of its articles.

Voice and text generators

I recently read an Ars Technica article about a new voice synthesis AI [12] that can take three seconds of a recording of anyone’s voice and then reproduce it with full emotional tone. It will produce audio that mimics the conditions and tones of the original speech and could be used not only to generate entire passages, but also to make corrections when someone misspeaks. I shared the news with our podcaster, whose job is not in jeopardy from this technology. We might be able to use AI to generate episode art, but Scott’s knowledge, humor, empathy, and skill in producing the Alameda PostCast can’t yet be matched by a computer.

Artificial Intelligence text generating tools are causing just as much consternation, because of their ability to provide responses to any queries, in all sorts of formats. You can interact with ChatGPT like it’s a search engine and ask questions. Or you can ask it to generate poetry, lyrics, prose, code, and more. One researcher even got ChatGPT to invent its own language [13]. It responds to questions, refines its answers, and almost appears intelligent, especially with casual interactions.

Overall, ChatGPT is useful with any information that already exists in its databases but lacks any self-awareness or the ability to access new and current information. It can generate 1,000 almost completely accurate words on the history of Alameda, but it can’t tell you about Ke Huy Quan’s acceptance speech at the Golden Globes this week.

Can AI text pass the test?

Academics are astounded by ChatGPT’s abilities [14]. In response, some schools and universities, including New York City schools [15], are banning the use of AI-generated work in any form, worried that students have a new way to cheat. Of course, a market has emerged for tools to detect AI-generated text, much like the tools that detect plagiarized work, but they produce mixed results.

As a test, I generated the following paragraph in ChatGPT using the prompt, “Explain in one paragraph why AI will not replace humans in journalism.”

Artificial Intelligence has the potential to assist journalists in tasks such as data analysis and fact-checking, but it is unlikely to replace humans in journalism entirely. This is because journalism is not just about disseminating information, but also about providing context and perspective, which requires human judgment and interpretation. Additionally, AI systems can be biased and lack the ability to understand the nuances and complexities of human society, which are crucial in the field of journalism. Furthermore, ethical and moral considerations also play a major role in journalism and AI cannot match the level of human empathy and emotional intelligence in handling sensitive issues. Therefore, while AI can be a useful tool for journalists, it cannot replace the human element that is essential in the practice of journalism.

When I fed that paragraph into this ChatGPT detector [16], I was told “Our algorithms believe that this content was written by a human.” But when I fed the same paragraph into the GPTZero detector [17], it declared “Your GPTZero score corresponds to the likelihood of the text being AI-generated.”

Is AI worth using?

Nevertheless, the AI-generated paragraph is correct. AI has the potential to be a useful assistant, but it is not truly intelligent enough to replace the value of humans in journalism. When you look at the Google News page, the stories listed are generated by a computer algorithm. And, for the most part, the primary pages have correct, current, and useful news. However, if you search for Alameda, CA [19], the information on the page is only partially about the City of Alameda. There’s also County news and cryptocurrency news, which are not directly relevant. A human editor would know the difference and assign the right stories to the right pages.

I have seen the value in generating quick AI illustrations for non-reporting situations, like our podcast art and other illustrations, especially since we don’t have an illustrator on staff. Other options, especially AI-generated text, make less sense for the Alameda Post and for reporting local news. We require human experience, context, and knowledge to understand what to cover and how to cover it. For now, these Artificial Intelligence tools will remain merely supplements and assistants; they cannot replace our human staff.

Update – CNET (a previous employer of mine) disagrees. They are using AI to generate finance articles [20].

Update 2 – Gizmodo’s review shows the articles to be riddled with errors [21], prompting CNET to review all the AI-authored posts.

Adam Gillitt is the Publisher of the Alameda Post [22]. Reach him at [email protected] [23]. His writing is collected at AlamedaPost.com/Adam-Gillitt [24].

Editorials and Letters to the Editor

All opinions expressed on this page are the author's alone and do not reflect those of the Alameda Post, nor does our organization endorse any views the author may present. Our objective as an independent news source is to fully reflect our community's varied opinions without giving preference to a particular viewpoint.

If you disagree with an opinion that we have published, please submit a rebuttal or differing opinion in a letter to the Editor [25] for publication. Review our policies page [26] for more information.